Optimizing the Upgrade and Purchase Funnel

Role: Product Designer owning the upgrade, trial, and purchase strategy and experiment execution across products

Team: Led design within a cross-functional group of 13 engineers and a PM

Outcomes: 16 experiments shipped → +9,300 annualized customers successfully onboarded

January–September 2025

Project Goal

Define the optimal Atlassian customer upgrade, trial, and buy experience, and break the vision down into experiments.

Customer problem

Who are we designing for?

While many roles are involved in purchase decisions, this project specifically targeted admins who have the ability to upgrade and pay for their team. These admins oversee product configuration, evaluation, and user expansion.

What problems do they currently face?

It’s not clear what edition customers are on

Admins are bounced between tabs and pulled out of context

Admins lack the information they need to make informed decisions in-product (e.g., the differences between plans and their value), forcing them to waste time searching other sources for pricing and feature details

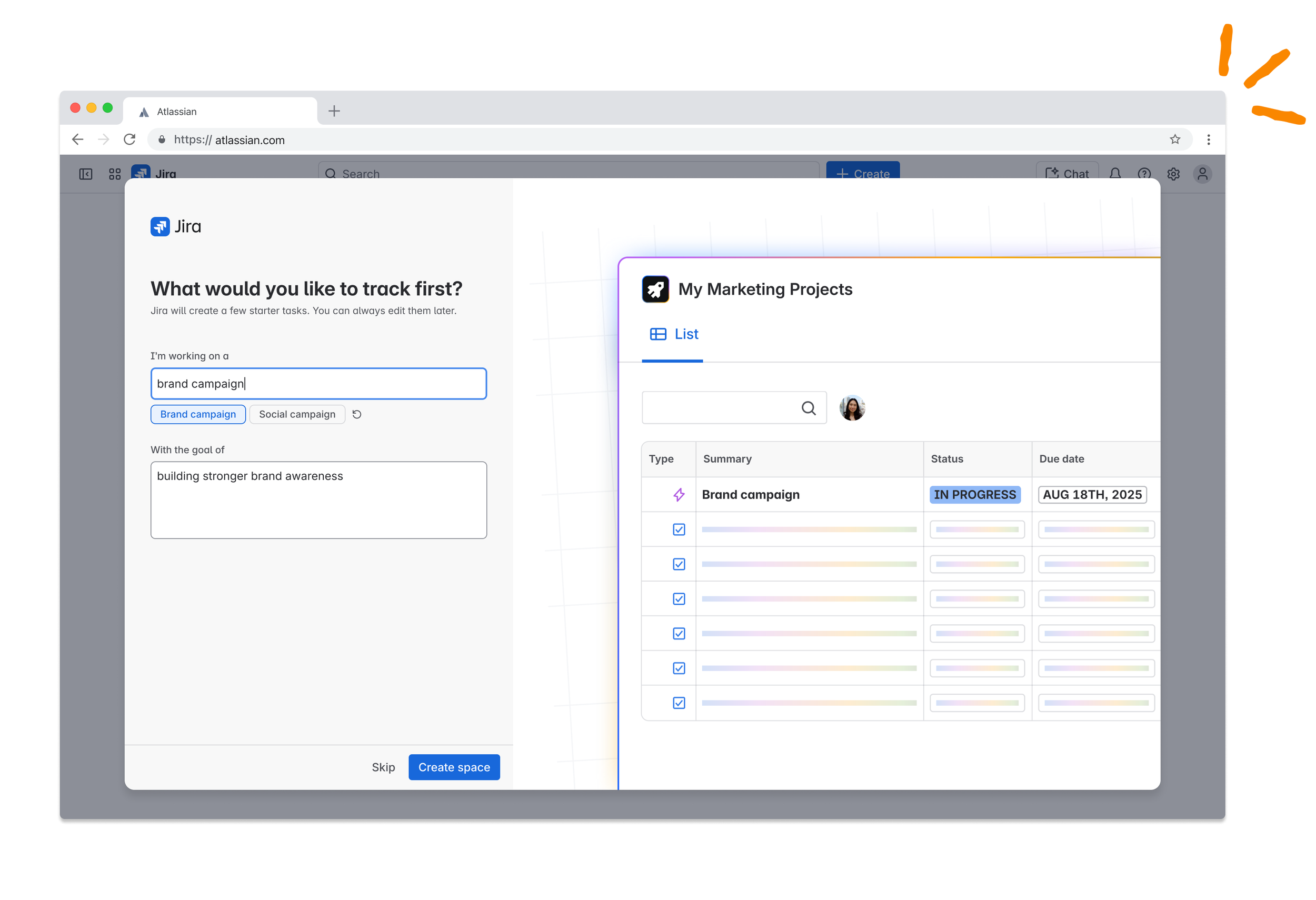

{insert image of problem}

Business problem

We noticed a significant drop-off in the purchase funnel and low trial-to-paid conversion: 64% of users dropped off during the upgrade flow, and 98% did not add payment during or after the trial.

In addition, trial experiences and branding were inconsistent across products and surfaces. These inconsistencies created inefficiencies for our teams, and the fragmented flows were costing us revenue and business impact.

Core hypothesis

If we consolidate key trial and edition awareness experiences, then we can design more efficiently and drive more upgrades and purchases because there will be less fragmentation, and customers will have more compelling, less confusing experiences across our products.

Success would be measured by our primary OKR of increasing purchases. We’d attach metric goals to each experiment to measure success more directly (e.g., a 15% increase in trial starts or purchases).

Design spike

With this foundation, I led a week-long spike to define our vision and roadmap. I brought together the various teams working on these journeys with the goal of:

Building a shared understanding of our current state

Learning from competitor patterns

Defining a north star experience together

I started by having each team map pain points and gaps over the current experiences.

{insert image}

We then individually brainstormed opportunity areas based on the pain points. I grouped the ideas into themes.

{insert image}

The themes translated into design principles, which became the key customer questions that we aimed to answer as part of our work:

1. What’s this? Clearly communicate the current plan and what it includes.

2. Why do I care? Clearly show why someone should trial or upgrade.

3. What do I do next? Provide a clear next step, grounded in customer needs.

Design

I explored different directions and tested with customers. I then broke the vision into experiments that could be delivered incrementally based on the control experiences.

I began with low-fidelity explorations

Then translated them into higher-fidelity designs

And put them in front of customers who were directly involved in the evaluation and purchasing process

What did I learn from research?

{insert image}

Final E2E flow

{insert images}

Outcomes & impact

Delivered the north star trial vision

Broke it into 11 experiments

Designed and shipped 7 experiments that led +x annualized business customers to start trials and purchase.

Learning

1. Translate design into business outcomes. Initially, I framed the project around design inconsistencies. Once I tied it to data and measurable business goals, it unlocked prioritization.

2. Understand why something doesn’t work. We had early experiments that didn’t perform. The metrics didn’t tell the full story, so I ran customer interviews to uncover unmet expectations and refine our approach.

3. Experiment strategically. Know when to go big or small. With long experiment runtimes and smaller audiences, I learned when to isolate variables to drive learning and when to take bold swings backed by confidence, data, and business momentum.